As a database administrator, you

may be in charge of multiple databases.

But often times, it’s the multi-server/instance burden that becomes the

most “click” intensive. What I mean by

that is you have to connect to multiple servers and multiple instances, or in

SSMS connect your Object Explorer to a bunch of different instances, and before

you know it you have a spaghetti cluster of instances on your desktop. You end up spending 90% of the time just

mis-clicking the wrong one, or worse, executing T-SQL against an unintended

instance.

Sometimes, the above scenario is

necessary. But for much

object/database/instance/server administration, this can be carried out with

PBM (Policy-Based Management) with the combination of CMS (Central Management

Servers). This central location allows

for the convenient method for do many tasks, and this blog post will focus on

PBM with CMS.

Policy-Based Management in and

of itself is a great addition with SQL Server 2008. But when you have dozens (or even hundreds)

of instances that require a standard set of policies and conditions, manually

pushing them out and evaluating them would be absurd (and let’s be honest…we

should be automating at every possible junction).

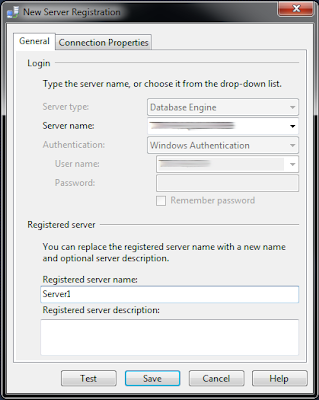

The first step to utilizing PBM

with CMS is registering a Central Management Server: Through SSMS in the Registered Servers

section, right-click on Central

Management Servers and select Register

Central Management Server…. Select a

SQL Server 2008 or higher instance to act as the CMS:

To logically group your servers,

I recommend creating a Server Group: Right-click on the CMS and select New Server Group…. Name the Server Group to your desire. I will be calling mine “2008R2” as this

Server Group will house my 2008 R2 instances, and “2012” to contain my 2012

instances:

Now I want to register a server

in my 2008R2 Server Group:

My Registered Servers pane

should look like the below now. Mind

you, there are only a couple of instances here and this is a very over-simplified implementation of

CMS:

There are a couple of ways to

approach PBM with CMS: 1) Create a

Policy on the Central Management Server and use that to evaluate against

managed servers, or 2) create a Policy and export/import to the managed servers. I will be focusing on the former method.

Go to the CMS and create a

Policy. Because this is not an in-depth

look at the extreme flexibility and power of PBM, I will just use an MS-shipped

out-of-the-box Best Practices Policy to import as an XML file. In the Object Explorer of my CMS I will go

into Management, Policy Management. Right-click on Policies and select Import

Policy…. Navigate to the desired

Policy File (XML file format) and select it.

I chose the famous Database Auto Close Policy to work with:

Now that I have a Policy created

on my CMS, so I will not worry about duplicating this effort and

exporting/importing into my registered servers.

Back at the Registered Servers SSMS pane, I want to be able to evaluate

this Policy against all of the servers registered under my CMS named

“CMS”. I simply right-click on the CMS

and select Evaluate Policies…. Now I will need to select the source. In this case, my CMS will house all of the

Policies, so I’ll choose this server.

Select the Policy(ies) that you want to evaluate against the registered

servers, and click Evaluate. You should be staring at your evaluation

results for the registered servers in the scope of where you selected to

evaluate policies:

And there it is! We have just used the administrative power of

Policy-Based Management, and ease of Central Management Servers to evaluate a

Policy against a subset of registered servers.

If there are any comments, questions, or issues please feel free to

leave a comment or email me at sqlsalt@gmail.com.